Beyond the Token: Is VL-JEPA the End of the LLM Era?

Meta’s VL-JEPA research challenges the foundations of modern AI. Explore why leading researchers believe token-based language models may not be the future of intelligence.

On December 11, 2025, Meta released a research paper that sent shockwaves through the artificial intelligence community. Co-authored by AI pioneer and Meta’s Chief AI Scientist, Yann LeCun, the paper introduces VL-JEPA (Vision Language Joint Embedding Predictive Architecture).

For years, the tech world has been obsessed with Large Language Models (LLMs) like GPT, Llama, and Gemini. But this new research poses a fundamental, almost existential question: Is this the end of the LLM era, or do these models still represent the future of intelligence?

To answer that, we need to look under the hood of how AI works today and why some of the greatest minds in the field believe we need a complete change in direction.

The Current State of AI: The Auto-Regressive Trap

To understand why VL-JEPA is a breakthrough, we first have to acknowledge the limitations of the AI systems we currently use. Models like ChatGPT or Gemini are auto-regressive.

How Auto-Regression Works

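When you ask an LLM a question, it doesn't "think" of the whole answer at once. Instead, it generates text one token at a time, moving from left to right. A token is a word or a fragment of a word; for simplicity, the steps below treat each token as a whole word.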

- It predicts the first word.

- That word is then fed back into the model as input.

- It predicts the second word based on the first.

- The process repeats until the sentence is finished.

Example:

If the model is generating the sentence "My name is Arohi," it first predicts "My." Then it uses "My" to predict "name." Then it uses "My name" to predict "is," and so on.
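To make the loop concrete, here is a minimal Python sketch. The `toy_model` lookup table and the `<end>` marker are hypothetical stand-ins: a real LLM scores every token in its vocabulary with a neural network and uses its own end-of-sequence token. Only the control flow, where each prediction is fed back in as input, is meant to mirror auto-regressive decoding.

```python
# A toy stand-in for an LLM: given the tokens so far, return the most likely
# next token. This is a hand-written lookup table, purely for illustration.
NEXT_TOKEN = {
    (): "My",
    ("My",): "name",
    ("My", "name"): "is",
    ("My", "name", "is"): "Arohi",
    ("My", "name", "is", "Arohi"): "<end>",
}


def toy_model(context):
    """Predict the next token from the full context generated so far."""
    return NEXT_TOKEN[tuple(context)]


def generate(model, max_tokens=10):
    """Greedy auto-regressive decoding: one model call per generated token."""
    tokens = []
    calls = 0
    for _ in range(max_tokens):
        next_token = model(tokens)   # predict from everything produced so far
        calls += 1
        if next_token == "<end>":    # the model signals the sentence is done
            break
        tokens.append(next_token)    # feed the prediction back in as input
    return tokens, calls


sentence, model_calls = generate(toy_model)
print(" ".join(sentence), model_calls)  # My name is Arohi 5
```

The last call returns the hypothetical `<end>` marker, so four words cost five sequential model calls, and each call only sees what has already been written.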

The Problem with This Approach

While this produces impressively human-like text, it has three massive flaws:

- No Upfront Planning:

There is no explicit planning step: the model commits to each word before it has produced the rest of the sentence. It is effectively "winging it" word by word.

- Computational Cost:

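Every new token requires another full pass through the model, and each pass has to wait for the previous one to finish. A 500-token answer therefore needs 500 sequential passes, which makes long outputs slow and expensive to compute.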

- Lack of True Understanding:

These models rely on the statistical probability of word sequences (language structure) rather than a genuine grasp of the physical world.
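The "Lack of True Understanding" point is easiest to see in the simplest possible statistical language model: a bigram model, which estimates the next word only from counts of which word followed which in its training text. Modern LLMs are neural networks that condition on long contexts and are far more capable, so treat this as a deliberately crude illustration of learning word-sequence probabilities, not a description of how GPT, Llama, or Gemini work. The corpus is made up.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real language models train on billions of tokens.
corpus = [
    "the cat sat on the mat",
    "the cat sat on the sofa",
    "the dog sat on the mat",
]

# Count how often each word is followed by each other word (bigram counts).
follow_counts = defaultdict(Counter)
for line in corpus:
    words = line.split()
    for prev, nxt in zip(words, words[1:]):
        follow_counts[prev][nxt] += 1


def next_word_probs(prev):
    """Estimate P(next word | previous word) purely from co-occurrence counts."""
    counts = follow_counts.get(prev, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # word never seen with a successor in the corpus
    return {word: n / total for word, n in counts.items()}


print(next_word_probs("the"))
# "cat" and "mat" get about 0.33 each, "sofa" and "dog" about 0.17 each
```

The model "knows" that *mat* often follows *the* without any notion of what a mat is, which is the kind of gap LeCun's critique targets.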

Yann LeCun’s Critique: Scaling is Not Intelligence

Yann LeCun has been a vocal critic of the idea that simply making LLMs bigger will lead to human-level intelligence. He argues that scaling is not the same as intelligence.

According to LeCun, larger datasets, more parameters, and longer context windows are just "more of the same." They don't solve the core issue: Thinking happens through concepts, not tokens.

> "Intelligence is not about predicting the next word. It is about understanding the world around you. Language is just a way to express thought, but the thought itself exists independently of the words used to describe it."

This philosophy is the foundation of VL-JEPA.

What is VL-JEPA?

VL-JEPA stands for Vision Language Joint Embedding Predictive Architecture. Unlike text-only LLMs, VL-JEPA is a vision-language model: it is designed to understand images, videos, and text together.

However, the real breakthrough isn't just the multimodal data; it's the way the model learns.

Non-Generative Learning

Most AI models today are *generative*: they are trained to reproduce raw data, such as filling in missing pixels in an image or predicting missing words in a sentence.

VL-JEPA is non-generative.

Instead of trying to recreate every detail of a scene (like the exact texture of a leaf on a tree), it predicts semantic embeddings: vectors that encode what the target means rather than its exact pixels or words. This lets it ignore unpredictable surface detail and focus on meaning. It builds an internal understanding of what it sees rather than learning how to describe it word by word.

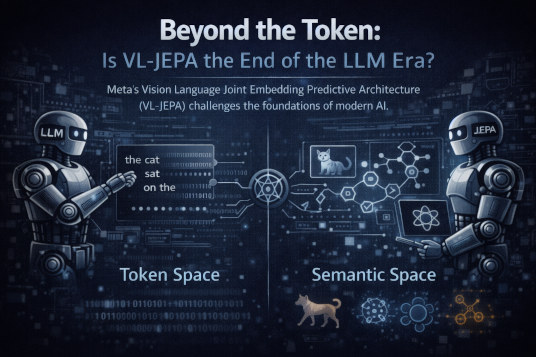
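A minimal sketch of the non-generative idea in plain Python. Everything here is an assumption for illustration: the vectors are made-up numbers, `predictor` is a hypothetical single linear layer, and the loss is simple cosine distance. The actual VL-JEPA encoders, predictor, and training objective may differ; the sketch only shows that the error is measured between vectors, never between reconstructed pixels or words.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def predictor(context_embedding, weights):
    """Hypothetical predictor: one linear layer mapping context -> target embedding."""
    return [sum(w * x for w, x in zip(row, context_embedding)) for row in weights]


def embedding_space_loss(context_embedding, target_embedding, weights):
    """Score the prediction against the target's embedding, not its raw pixels or words.

    Because the comparison happens between vectors, any detail the target
    encoder leaves out of the embedding never has to be predicted.
    """
    predicted = predictor(context_embedding, weights)
    return 1.0 - cosine_similarity(predicted, target_embedding)


# Made-up numbers, purely illustrative.
context = [1.0, 0.0, 0.5]   # stands in for an encoded image/video + question
target = [0.2, 0.9, 0.1]    # stands in for the encoded answer text

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]       # "untrained" predictor
aligned = [[0.2, 0, 0], [0.9, 0, 0], [0.1, 0, 0]]  # maps context onto target

print(round(embedding_space_loss(context, target, identity), 3))  # 0.759
print(abs(embedding_space_loss(context, target, aligned)) < 1e-9)  # True
```

With the identity weights the prediction points away from the target and the loss is high; with the aligned weights it matches the target embedding and the loss drops to essentially zero. Training would adjust the weights to move from the first case toward the second.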

Deep Dive: Semantic Space vs. Token Space

To understand why this is a revolutionary shift, we need to examine how information is organized inside the AI’s “brain.”

In AI systems, data is represented using vectors (lists of numbers) arranged in a mathematical space.

1. Token Space (The LLM Way)

In token space, the AI focuses on words, sub-words, grammar, and punctuation. It maps how words relate to each other statistically.

- Focus: What word comes after "The cat sat on the..."

- Result: Excellent grammar and mimicry, but weak logic and physical understanding.

2. Semantic Space (The VL-JEPA Way)

In semantic space, the AI organizes information based on meaning and concepts, regardless of the specific words used.

| Feature | Token Space (LLMs) | Semantic Space (VL-JEPA) |
|---|---|---|
| Unit of Thought | Individual words/tokens | Abstract concepts/vectors |
| Focus | Probability of the next word | Relationship between ideas |
| Example | "Dog" and "Puppy" are different words | "Dog" and "Puppy" are nearly identical vectors |
| Processing | Sequential | Holistic |

The "Dog" Example

In semantic space, the sentences "A dog is running" and "A puppy is playing" are placed very close together because their meanings are similar. Conversely, "A dog is running" and "A car is parked" are placed far apart.

The argument is that, by working in semantic space, VL-JEPA can relate a falling ball to the concept of gravity without generating a single word of text.
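The "Dog" example can be reproduced with cosine similarity, a standard way to measure how closely two vectors point in the same direction. The three-dimensional vectors below are hand-picked toys chosen to mimic the behaviour described above; they are not output from VL-JEPA or any real model, and real embeddings have hundreds or thousands of learned dimensions.

```python
import math

# Hand-picked toy vectors, NOT the output of VL-JEPA or any real model.
# Read the three axes loosely as "animal", "motion" and "vehicle".
embeddings = {
    "A dog is running": [0.9, 0.8, 0.0],
    "A puppy is playing": [0.9, 0.7, 0.1],
    "A car is parked": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """1.0 = same direction (same meaning), around 0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


query = embeddings["A dog is running"]
for sentence, vector in embeddings.items():
    print(f"{sentence}: {cosine_similarity(query, vector):.2f}")
# A dog is running: 1.00
# A puppy is playing: 0.99
# A car is parked: 0.07
```

The two sentences share almost no words, yet their vectors nearly coincide, while the car sentence points in a different direction.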

Why This Changes Everything

The shift from token-based prediction to semantic-based prediction offers three major advantages:

- Faster Reasoning:

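Instead of emitting an answer token by token, the model predicts the meaning of the answer as a single embedding, so it can jump directly to conclusions and only needs to produce text when a human has to read it.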

- Efficiency:

According to the paper, VL-JEPA achieves strong results with fewer trainable parameters than a comparable token-generating vision-language model.

- Human-Like Logic:

Humans think in concepts, not characters. VL-JEPA aligns more closely with biological cognition.

The Vision: The "World Model"

LeCun does not see VL-JEPA as just another model; he sees it as a World Model.

A World Model understands cause and effect. It knows that if you push a glass off a table, it will fall. It understands how the world changes over time.

Are LLMs Dead?

The short answer is no.

But their role is changing.

In the kind of AI system LeCun envisions:

- The World Model (JEPA): Handles reasoning, planning, and understanding reality.

- The LLM: Acts as the language interface, translating abstract thoughts into human-readable text.

Conclusion: A New Direction for AI

VL-JEPA represents a pivot from “AI that talks” to “AI that understands.” By moving beyond auto-regressive token prediction and into joint embedding predictive architectures, Meta is laying the groundwork for AI that can reason about the physical world.

We are moving away from a time when AI was merely an advanced form of autocomplete and toward a future where machines possess a foundational understanding of concepts, physics, and logic.

Engineering Team

The engineering team at Originsoft Consultancy brings together decades of combined experience in software architecture, AI/ML, and cloud-native development. We are passionate about sharing knowledge and helping developers build better software.
