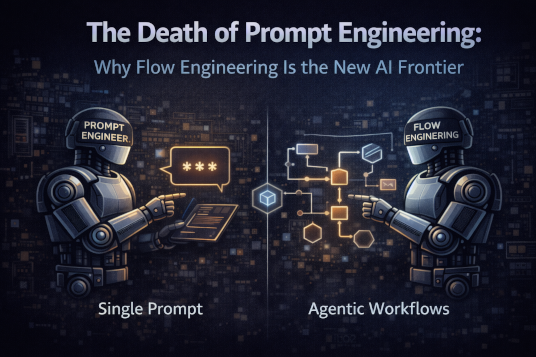

The Death of Prompt Engineering: Why Flow Engineering Is the New AI Frontier

Prompt engineering is no longer enough. Learn why flow engineering and agentic workflows now define how reliable, scalable AI systems are built.

For the last two years, the tech world has been obsessed with “the prompt.”

We were told that Prompt Engineer would be the hottest job of the decade—that if you could just discover the perfect sequence of “magic words,” you could unlock the god-like capabilities of Large Language Models (LLMs).

Entire courses, marketplaces, and job descriptions emerged around this idea. Developers swapped prompt snippets the way front-end engineers once shared CSS hacks. “Take a deep breath.” “Think step by step.” “You are an expert.” We treated language models like mystical artifacts that responded to incantations.

But the numbers are in, and they tell a very different story.

The era of obsessive prompt tweaking is over.

We have entered the era of Flow Engineering.

The Data Doesn’t Lie: Why a Weak Model Can Outperform a Strong One

The most compelling evidence for this shift comes from recent benchmarks shared by Andrew Ng, one of the most influential figures in modern AI.

Using the HumanEval coding benchmark, researchers compared traditional single-prompt approaches with structured agentic workflows.

The results were staggering:

- GPT-4 (single zero-shot prompt): 67.0% correct

- GPT-3.5 (single zero-shot prompt): 48.1% correct

- GPT-3.5 (wrapped in an agentic workflow): up to 95.1% correct

Read that again.

An older, significantly less “intelligent” model didn’t just compete with GPT-4 — it obliterated it.

Not because someone discovered a clever new prompt.

Not because the wording was better.

But because the architecture around the model changed.

This is the moment many engineers miss: model capability is no longer the dominant variable. System design is.

Prompting Was Never Engineering

A single prompt is a shot in the dark.

You press Enter and hope the probabilistic weights of the model align just right. If it fails, you tweak a word, add another instruction, or try again. This is trial-and-error experimentation — not engineering.

Prompt engineering optimized for luck.

Flow engineering optimizes for repeatability.

Real production systems don’t tolerate luck. They demand:

- Predictable, repeatable behavior

- Measurable improvement

- Graceful failure modes

A prompt can’t give you any of that.

From Single Shots to Systems

Flow Engineering (often implemented as agentic workflows) treats the LLM as one component in a larger system, not the system itself.

Instead of asking the model to:

> “Analyze this data, reason about it, write code, verify the result, and explain it to me”

We decompose the task into explicit steps, each with a clear responsibility.

The AI no longer improvises everything at once.

It collaborates with itself — and with tools — inside a structured flow.
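The decomposition described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: `call_model` is a hypothetical stand-in for any LLM client (stubbed here so the example runs offline), and the step names and `run_flow` helper are assumptions made for this example.

```python
from typing import Callable

# Hypothetical stand-in for an LLM client; a real flow would call a model API here.
def call_model(instruction: str, payload: str) -> str:
    return f"[{instruction}] {payload}"

Step = Callable[[str], str]

def make_step(instruction: str) -> Step:
    """Build a step that has exactly one narrow responsibility."""
    def step(payload: str) -> str:
        return call_model(instruction, payload)
    return step

def run_flow(steps: list[tuple[str, Step]], initial: str) -> tuple[str, list[tuple[str, str]]]:
    """Run steps in order and record every intermediate output for inspection."""
    trace: list[tuple[str, str]] = []
    current = initial
    for name, step in steps:
        current = step(current)
        trace.append((name, current))
    return current, trace

# The single "do everything" request, split into explicit stages.
FLOW = [
    ("analyze", make_step("analyze")),
    ("write_code", make_step("write_code")),
    ("verify", make_step("verify")),
    ("explain", make_step("explain")),
]

if __name__ == "__main__":
    result, trace = run_flow(FLOW, "sales.csv")
    for name, output in trace:
        print(name, "->", output)
```

Because every intermediate output is kept in `trace`, a bad result can be traced back to the step that produced it instead of to one opaque prompt.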

This mirrors past architectural shifts:

- Monoliths → Microservices

- Scripts → Pipelines

- Ad-hoc decisions → Reproducible systems

The New Pillars of AI Development

To build reliable AI systems in 2026, three things matter far more than prompt wording.

1. Context Is the New Fuel

It’s not just about what you tell the AI to do — it’s about what it can see.

Modern systems prioritize:

- Retrieval-Augmented Generation (RAG)

- Dynamic context windows

- Structured inputs over verbose instructions

A mediocre model with perfect context will outperform a frontier model guessing in the dark.

Context isn’t fluff — it’s data engineering.
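As a rough sketch of treating context as data, the example below retrieves relevant passages and packages them as structured input. Everything here is an assumption for illustration: the `DOCUMENTS` corpus is invented, and the word-overlap `score` function is a crude placeholder for the embedding similarity a real RAG system would use.

```python
import json

# Toy in-memory corpus; a real system would query a vector store or search index.
DOCUMENTS = {
    "refunds": "Refunds are issued within 14 days of purchase.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Hardware carries a 2 year limited warranty.",
}

def score(query: str, text: str) -> int:
    """Count shared lowercase words; a crude stand-in for embedding similarity."""
    return len(set(query.lower().split()) & set(text.lower().split()))

def retrieve(query: str, k: int = 2) -> list[dict]:
    """Return up to k documents that share at least one word with the query."""
    ranked = sorted(DOCUMENTS.items(), key=lambda item: score(query, item[1]), reverse=True)
    return [
        {"id": doc_id, "text": text}
        for doc_id, text in ranked[:k]
        if score(query, text) > 0
    ]

def build_context(query: str) -> str:
    """Package the question and retrieved passages as structured JSON input."""
    return json.dumps({"question": query, "passages": retrieve(query)}, indent=2)

if __name__ == "__main__":
    print(build_context("how long does shipping take"))
```

The model then receives a small, explicit payload rather than a long paragraph of instructions, and the retrieval step can be tested and tuned independently of any prompt wording.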

2. Architecture Beats Intelligence

Architecture defines:

- Where decisions are made

- How errors are handled

- Which steps are reversible

- Where humans can intervene

A well-designed flow makes failure local and recoverable.

A giant prompt makes failure global and catastrophic.

This is why AI reliability is now a system design problem, not a prompt-writing one.
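One way to make failure local and recoverable is to wrap each step in its own retry and fallback logic. The sketch below assumes a hypothetical `run_step` helper and a `make_flaky` stub that simulates a model returning malformed output; neither comes from a specific framework.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class StepResult:
    name: str
    ok: bool
    output: Optional[str] = None
    error: Optional[str] = None
    attempts: int = 0

def run_step(name: str, fn: Callable[[str], str], payload: str,
             retries: int = 2,
             fallback: Optional[Callable[[str], str]] = None) -> StepResult:
    """Run one step with bounded retries; if they are exhausted, use the fallback."""
    last_error = None
    for attempt in range(1, retries + 2):
        try:
            return StepResult(name, True, fn(payload), attempts=attempt)
        except Exception as exc:  # the failure stays inside this step
            last_error = str(exc)
    if fallback is not None:
        return StepResult(name, True, fallback(payload), error=last_error, attempts=retries + 1)
    return StepResult(name, False, error=last_error, attempts=retries + 1)

def make_flaky(failures: int) -> Callable[[str], str]:
    """Hypothetical step that raises `failures` times before succeeding."""
    state = {"left": failures}
    def step(payload: str) -> str:
        if state["left"] > 0:
            state["left"] -= 1
            raise ValueError("malformed model output")
        return payload.upper()
    return step

if __name__ == "__main__":
    print(run_step("extract", make_flaky(1), "abc"))
    print(run_step("extract", make_flaky(5), "abc", retries=1,
                   fallback=lambda p: "needs human review"))
```

The fallback is also a natural place for human intervention: a step that cannot recover on its own can route its input to a review queue instead of failing the whole run.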

3. Workflow Is How Intelligence Emerges

Intelligence isn’t a single action — it’s a process.

Modern AI systems model collaboration:

- One agent gathers information

- Another synthesizes it

- Another validates it

- Another formats or executes

This mirrors how high-performing human teams work — intentionally.

Four Agentic Patterns Driving the Shift

If you want to move beyond prompt tinkering, these four patterns define modern AI systems.

1. Reflection Loops

In a reflection loop, an agent generates output, then critiques it.

Examples:

> “Here is the solution. Now find three flaws.”

> “Assume this is wrong. Explain why.”

Reflection breaks the forward-only generation trap: the model gets a chance to catch hallucinations and logic errors before the output leaves the system.
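A reflection loop can be sketched as a generate, critique, revise cycle with a fixed round budget. In this illustration the `reflect` function and both stubs are hypothetical; in a real flow, `generate` and `critique` would each be a model call (or the critic could be a test suite).

```python
from typing import Callable, Optional

def reflect(task: str,
            generate: Callable[[str, Optional[str]], str],
            critique: Callable[[str, str], Optional[str]],
            max_rounds: int = 3) -> tuple[str, list[str]]:
    """Draft, critique, and revise until the critic has no objection
    or the round budget runs out. Returns the final draft and all critiques."""
    critiques: list[str] = []
    draft = generate(task, None)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if feedback is None:  # critic is satisfied
            break
        critiques.append(feedback)
        draft = generate(task, feedback)
    return draft, critiques

# Hypothetical stubs standing in for two separate model calls.
def stub_generate(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "def add(a, b): return a - b"  # deliberately flawed first draft
    return "def add(a, b): return a + b"

def stub_critique(task: str, draft: str) -> Optional[str]:
    return "The function subtracts instead of adding." if "a - b" in draft else None

if __name__ == "__main__":
    final, notes = reflect("write add", stub_generate, stub_critique)
    print(final)
    print(notes)
```

The `max_rounds` cap matters in practice: without it, a critic that never approves would loop indefinitely and burn tokens.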

2. Tool Integration

Guessing is optional now.

Flow engineering gives agents access to:

- APIs

- Databases

- Web search

- Code interpreters

- Domain-specific tools

An AI that can verify beats an AI that can sound confident — every time.
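Tool access usually comes down to a registry plus a dispatcher that executes structured requests from the model. The sketch below assumes a request shape of `{"tool": name, "args": {...}}`, which is an invention for this example rather than any vendor's function-calling format; the `tool` decorator and both tools are likewise hypothetical.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., object]] = {}

def tool(fn):
    """Register a function so the flow can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def multiply(a: float, b: float) -> float:
    return a * b

@tool
def word_count(text: str) -> int:
    return len(text.split())

def dispatch(request_json: str) -> dict:
    """Execute a tool request of the assumed form {"tool": name, "args": {...}}."""
    request = json.loads(request_json)
    name = request.get("tool")
    if name not in TOOLS:  # the model asked for something it is not allowed to use
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**request.get("args", {}))}
    except TypeError as exc:  # wrong or missing arguments
        return {"ok": False, "error": str(exc)}

if __name__ == "__main__":
    print(dispatch('{"tool": "multiply", "args": {"a": 12, "b": 7}}'))
    print(dispatch('{"tool": "delete_db", "args": {}}'))
```

Returning errors as data instead of raising lets the flow feed the error back to the model for a corrected request.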

3. Explicit Planning Phases

Before execution, the system forces the model to plan.

The plan is:

- Structured

- Inspectable

- Debatable

Separating planning from execution replaces improvisation with controlled execution: the plan can be reviewed, challenged, and rejected before anything runs.
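A planning phase can be sketched as a validation gate between the model's proposed plan and the code that runs it. The `ALLOWED_ACTIONS` whitelist, the `PlanStep` type, and the plan format are all assumptions for this example; the point is only that the plan is plain data that can be checked before execution.

```python
from dataclasses import dataclass

ALLOWED_ACTIONS = {"load", "filter", "summarize"}  # assumed whitelist for this sketch

@dataclass(frozen=True)
class PlanStep:
    action: str
    argument: str

def parse_plan(raw: list[dict]) -> list[PlanStep]:
    """Validate a model-proposed plan before anything runs."""
    if not raw:
        raise ValueError("plan is empty")
    steps = []
    for i, item in enumerate(raw):
        action = item.get("action")
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"step {i}: action {action!r} is not allowed")
        steps.append(PlanStep(action, str(item.get("argument", ""))))
    return steps

def execute(plan: list[PlanStep]) -> list[str]:
    """Execute an approved plan; in this sketch each step only reports what it would do."""
    return [f"{step.action}({step.argument})" for step in plan]

if __name__ == "__main__":
    proposed = [
        {"action": "load", "argument": "orders.csv"},
        {"action": "summarize", "argument": "by region"},
    ]
    print(execute(parse_plan(proposed)))
```

A rejected plan costs one validation error; a rejected action discovered mid-execution can cost a corrupted dataset.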

4. Multi-Agent Systems

We are moving away from the myth of the “generalist AI.”

Instead, we build teams of specialists:

- A planner

- A researcher

- An executor

- A critic

- A formatter

Each agent has a narrow role, limited permissions, and clear success criteria.

This is how reliability scales.
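The three properties named above (narrow role, limited permissions, clear success criteria) map naturally onto a small data structure. This is a sketch under stated assumptions: the `Agent` class, `run_team`, and the lambda behaviors are hypothetical stand-ins for model-backed agents, not the API of any orchestration framework.

```python
from dataclasses import dataclass
from typing import Callable

def non_empty(output: str) -> bool:
    """Default success criterion: the agent produced something."""
    return bool(output.strip())

@dataclass
class Agent:
    role: str                                # narrow role
    act: Callable[[str], str]                # stubbed behavior; a model call in practice
    succeeded: Callable[[str], bool]         # clear success criterion
    allowed_tools: frozenset = frozenset()   # limited permissions

    def use_tool(self, name: str) -> None:
        """Raise if this agent is not permitted to use the named tool."""
        if name not in self.allowed_tools:
            raise PermissionError(f"{self.role} may not use {name}")

def run_team(agents: list[Agent], task: str) -> str:
    """Pass the work product from one specialist to the next, checking each hand-off."""
    work = task
    for agent in agents:
        work = agent.act(work)
        if not agent.succeeded(work):
            raise RuntimeError(f"{agent.role} did not meet its success criterion")
    return work

TEAM = [
    Agent("planner", lambda t: f"plan for: {t}", non_empty),
    Agent("researcher", lambda t: f"{t} | facts gathered", non_empty, frozenset({"web_search"})),
    Agent("critic", lambda t: f"{t} | reviewed", lambda out: "reviewed" in out),
]

if __name__ == "__main__":
    print(run_team(TEAM, "compare vendors"))
```

Checking the success criterion at every hand-off means a weak intermediate result stops the run at the responsible agent rather than surfacing as a confusing final answer.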

Why This Matters for Real Businesses

Prompt engineering scaled poorly because it is:

- Fragile

- Undocumented

- Impossible to reason about

- Prone to silent failure

Flow engineering scales because:

- You can version it

- You can test it

- You can monitor it

- You can improve one step at a time

This is why enterprises now invest in:

- Agent orchestration frameworks

- LLM observability

- Workflow versioning

- Cost-aware execution graphs

The industry has already moved on.

Conclusion: Stop Chasing Words, Start Building Systems

The “magic word” era of AI is over.

It was exciting. It got us started.

But it was never sustainable.

The future belongs to builders who understand:

- Prompts are inputs

- Flows are products

- Architecture is intelligence

You can’t debug a prompt.

You can’t monitor a prompt.

You can’t build a business on a prompt.

But you can do all of that with a well-designed flow.

Stop chasing words.

Start engineering intelligence.

Engineering Team

The engineering team at Originsoft Consultancy brings together decades of combined experience in software architecture, AI/ML, and cloud-native development. We are passionate about sharing knowledge and helping developers build better software.
